Selecting the Ideal Large Language Model (LLM) for Your Company: A Comprehensive Guide

Artificial intelligence (AI), and especially Large Language Models (LLMs), are becoming increasingly important in today’s business world because of their potential to transform business processes and improve efficiency. However, selecting the right LLM can be complex, since the best choice depends on your company’s specific needs and goals. This guide provides a step-by-step approach to help you find the right LLM for your company.

1. Define Your Purpose: What Do You Expect from an LLM?

The first step is to clearly define the purposes for which you want to use the LLM. Identify which business processes your company wants to automate and how the LLM will play a role in these processes.

- Automation: By automating repetitive tasks such as answering customer inquiries, writing emails, summarizing documents, and scheduling appointments, you can save time and resources. LLMs can handle these tasks quickly and at scale, freeing your employees to focus on more strategic work.

- Text Generation: You can use LLMs to create marketing content, write product descriptions, summarize reports, or draft creative copy. LLMs can imitate different writing styles and tailor content to your target audience.

- Data Analysis: LLMs can help you extract meaningful insights from large data sets, particularly unstructured text such as customer reviews, support tickets, and reports. You can use these analytical capabilities to identify market trends, understand customer behavior, and make data-driven decisions.

2. Determine Your Needs: LLM Capacity and Features

To make the right selection, determine which features the LLM must have to achieve the goals you defined.

- Number of Parameters: Generally, models with more parameters can perform more complex tasks and produce more nuanced outputs. However, a higher number of parameters requires more processing power and memory.

- Token Length (Context Window): The maximum number of tokens a model can process in a single request, known as its context window, determines how much text it can handle at once. If you work with long reports, articles, or books, you may need a model with a larger context window.

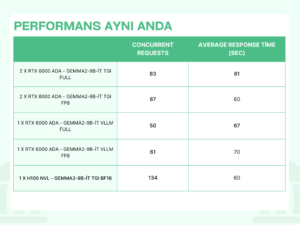

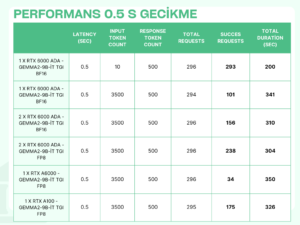

- Speed and Performance: For real-time applications, a model that can provide quick and accurate responses is critical. Especially in interactive applications such as chatbots and virtual assistants, latency directly impacts the user experience.
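To make the parameter-count and token-length trade-offs more concrete, here is a rough sizing sketch in Python. It assumes the common rules of thumb that weights take about 2 bytes per parameter at 16-bit precision (less when quantized) and that English text averages roughly 4 characters per token; the function names, example model sizes, and context window sizes are illustrative, and real token counts should come from the model's own tokenizer.

```python
# Rough sizing sketch (assumptions, not vendor figures): memory needed just to
# hold model weights, plus a crude check of whether a text fits a context window.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 uses the same amount of memory
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory (GB) to store the weights alone.

    Ignores activations, KV cache, and framework overhead, which add more.
    """
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_context(text: str, context_window: int, chars_per_token: float = 4.0,
                 reserved_for_output: int = 1024) -> bool:
    """Crude check assuming ~4 characters per token (typical for English).

    Reserves some tokens for the model's answer. A real check should count
    tokens with the model's own tokenizer.
    """
    estimated_tokens = len(text) / chars_per_token
    return estimated_tokens + reserved_for_output <= context_window


if __name__ == "__main__":
    for size in (7e9, 13e9, 70e9):
        for precision in ("fp16", "int4"):
            print(f"{size / 1e9:.0f}B @ {precision}: "
                  f"{weight_memory_gb(size, precision):.1f} GB")

    report = "word " * 20_000  # 100,000 characters of placeholder text
    print("Fits 8k window:", fits_context(report, 8_192))
    print("Fits 128k window:", fits_context(report, 128_000))
```

Weights are only part of the picture: activations, the KV cache, and framework overhead add to the total, so treat these numbers as a lower bound when sizing hardware.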

3. Licensing: Suitability for Commercial Use

LLMs are released under different licenses, which determine whether and how you can use a model commercially. Make sure the license of the model you choose permits your intended commercial use. Carefully review the licensing terms and seek legal advice if necessary.

4. Determine Hardware Requirements

An LLM’s performance depends heavily on your hardware infrastructure. The model’s size and complexity, together with your use case, determine the hardware resources required. Insufficient hardware leads to slow responses and delays.

- Fast Responses or Complex Queries? Applications like chatbots, where speed is essential, require a low-latency, high-processing-capacity hardware infrastructure, while projects involving complex queries like big data analysis require high memory capacity and processing power.

- Concurrent Users: The number of simultaneous users directly affects the required hardware resources. More users require more processing power and memory.
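One way to estimate how concurrent users drive memory is to size the key-value (KV) cache that transformer models keep for every active request. The sketch below assumes a worst case in which every user holds a full context at the same time; the `ModelShape` values are hypothetical and should be replaced with the numbers from your model’s configuration. The result comes on top of the memory needed for the weights themselves.

```python
from dataclasses import dataclass


@dataclass
class ModelShape:
    """Hypothetical architecture numbers; read real values from the model's config."""
    num_layers: int
    num_kv_heads: int  # may be fewer than attention heads (grouped-query attention)
    head_dim: int
    bytes_per_element: int = 2  # fp16/bf16


def kv_cache_bytes_per_token(m: ModelShape) -> int:
    # Factor 2: one key vector and one value vector per layer per KV head.
    return 2 * m.num_layers * m.num_kv_heads * m.head_dim * m.bytes_per_element


def kv_cache_gib(m: ModelShape, tokens_per_user: int, concurrent_users: int) -> float:
    """Worst-case KV cache memory (GiB) if every user holds `tokens_per_user` tokens."""
    total_bytes = kv_cache_bytes_per_token(m) * tokens_per_user * concurrent_users
    return total_bytes / 2**30


if __name__ == "__main__":
    model = ModelShape(num_layers=32, num_kv_heads=8, head_dim=128)
    for users in (1, 20, 100):
        print(f"{users:>3} users: {kv_cache_gib(model, 4_096, users):.1f} GiB")
```

With these assumed values, each user holding 4,096 tokens adds about 0.5 GiB, so 100 simultaneous users would need roughly 50 GiB for the cache alone. Serving software that allocates cache on demand can lower the typical figure, but the worst case is a reasonable planning bound.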

5. On-Premises vs. Cloud: Hosting Options

You can choose to host your LLM on-premises or in the cloud. Both options have their advantages and disadvantages.

- Control and Security: On-premises solutions provide full control over your data and models but require more maintenance and management. Cloud-based solutions shift much of the responsibility for infrastructure security and maintenance to the cloud provider, although you remain responsible for how your data and access are managed.

- Cost: On-premises solutions require high upfront costs, while cloud-based solutions typically offer lower initial costs and scalable pricing models.

- Scalability and Flexibility: Cloud-based solutions allow you to scale resources quickly and easily according to your needs. On-premises solutions are more limited in terms of scalability.
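A simple way to compare the two cost profiles is to find the month in which the cumulative cost of an on-premises deployment drops below that of running in the cloud. The sketch below assumes flat monthly costs on both sides and ignores hardware refresh cycles, staffing changes, and price changes; the function name and all figures in the example are hypothetical.

```python
import math
from typing import Optional


def break_even_month(onprem_upfront: float, onprem_monthly: float,
                     cloud_monthly: float) -> Optional[int]:
    """Return the first month in which cumulative on-prem cost <= cumulative cloud cost.

    Cumulative on-prem cost after n months: upfront + n * onprem_monthly.
    Cumulative cloud cost after n months:   n * cloud_monthly.
    Returns None when the cloud is not more expensive per month,
    because the upfront investment is then never recovered.
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(onprem_upfront / monthly_savings)


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    month = break_even_month(onprem_upfront=120_000, onprem_monthly=3_000,
                             cloud_monthly=8_000)
    print(f"On-premises breaks even after {month} months")
```

With a hypothetical $120,000 upfront investment, $3,000 per month in on-premises running costs, and $8,000 per month in the cloud, the on-premises option becomes cheaper after 24 months. If you expect to use the deployment for less time than the break-even point, the cloud is likely the more economical choice.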